using python beautifulsoup

A common problem you may face when using the Python module requests is getting blocked by websites while extracting information. To protect your privacy and prevent websites from tracking your traffic, you can use free proxies as a bridge for your connections.

A free proxy server is a proxy you can use without authentication. Pay attention to where a proxy comes from: because it takes your traffic and re-routes it through a different IP address, the proxy server has access to the requests you make. A proxy server acts on behalf of the client when requesting a service, which can mask the true origin of the request from the resource server.
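With requests, a proxy is set per request through the `proxies` argument, a dict that maps a URL scheme to a proxy URL. The sketch below assumes a plain HTTP proxy written as `ip:port` (the form built later in this article); the helper names `proxy_mapping` and `get_via_proxy` are hypothetical. A SOCKS proxy would instead need a `socks5://` URL and the optional `requests[socks]` extra.

```python
import requests


def proxy_mapping(proxy: str) -> dict:
    """Build the `proxies` dict expected by requests from an 'ip:port' string.

    Assumes a plain HTTP proxy; requests tunnels HTTPS traffic through it
    with the CONNECT method, so the same proxy URL serves both schemes.
    """
    proxy_url = f"http://{proxy}"
    return {"http": proxy_url, "https": proxy_url}


def get_via_proxy(url: str, proxy: str, timeout: float = 5.0) -> requests.Response:
    """Send a GET request to url through the given proxy (hypothetical helper)."""
    return requests.get(url, proxies=proxy_mapping(proxy), timeout=timeout)


if __name__ == "__main__":
    # Placeholder address from a documentation IP range, not a real proxy
    print(proxy_mapping("192.0.2.10:8080"))
```

A short timeout matters with free proxies, since many of them accept connections slowly or not at all.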

Many well-known, reputable free proxy lists are available. We will use the free proxy lists published by two well-known providers:

- Proxynova: https://www.proxynova.com/proxy-server-list/elite-proxies/

- Hidemy: https://hidemy.name/en/proxy-list/

In this article, we will extract free proxies from the websites cited above with two distinct methods, the first with pandas and the second with BeautifulSoup. You can then use requests with these proxies to get the information you need.

Let’s import the libraries needed for our analysis:

```python
from bs4 import BeautifulSoup
import requests
import pandas as pd
```

1. Let’s work on the free proxies provided by Proxynova

Proxynova maintains an up-to-date list of working proxy servers available for public use. Their internal tools check many proxy servers every day, and most proxies are tested at least once every 15 minutes.

Their proxy list is updated once every minute. The list can be filtered by several attributes, such as port number, country of origin, and anonymity level.

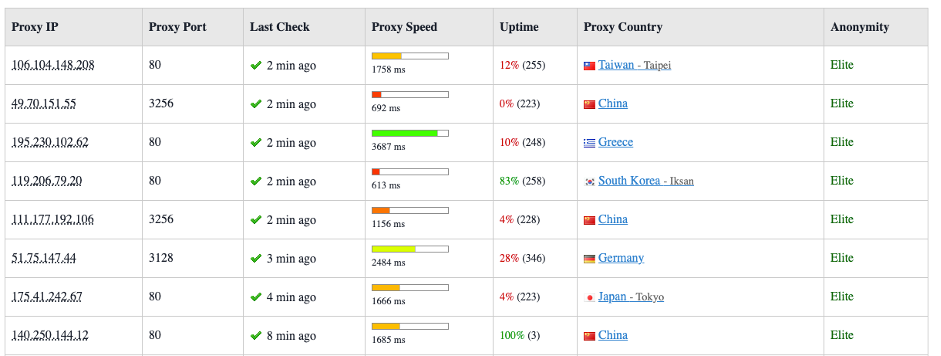

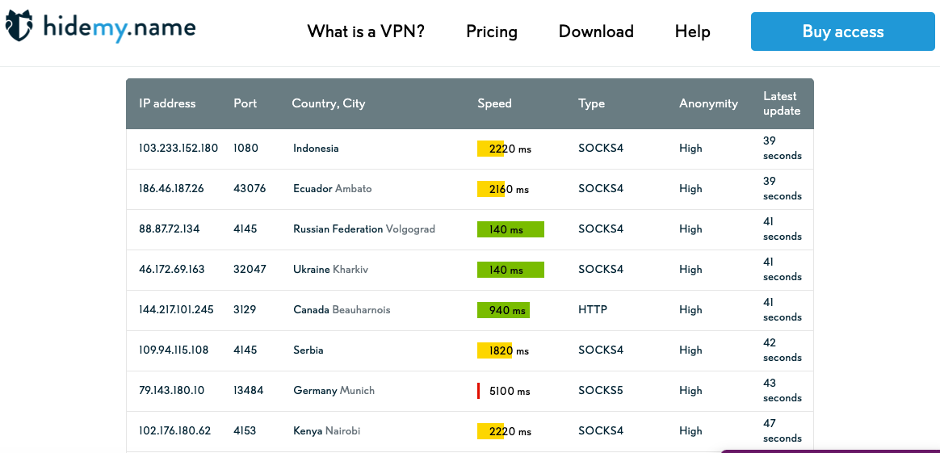

The free proxy list is an HTML table, as the preview above shows.

pandas.read_html() is a fast and easy way to get data out of HTML tables: it returns a list with one dataframe per table found on the page (a parser such as lxml must be installed):

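```python
url = 'https://www.proxynova.com/proxy-server-list/elite-proxies/'

# read_html returns a list of dataframes, one per <table> on the page
lst = pd.read_html(url)
df = lst[0]  # the proxy list is the first table
```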

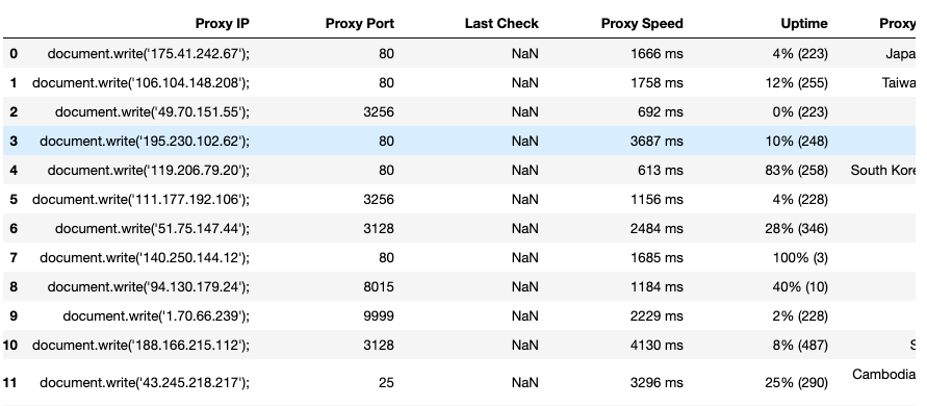

The initial dataframe is shown above.

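This dataframe needs some cleanup before it becomes a well-presented list of free proxies: we keep the first seven columns, keep only the rows whose IP cell contains the JavaScript document.write() call, strip that wrapper from the IP addresses, and convert the speeds to integers.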

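```python
# Keep the first seven columns and treat every cell as text
df = df[df.columns[:7]].astype(str)

# Keep only the rows whose IP cell contains the document.write() wrapper
df = df[df['Proxy IP'].str.contains('document.write', regex=False)].copy()

# Renumber the rows from 0
df = df.reset_index(drop=True)

# Strip the document.write('...'); wrapper to leave the bare IP address
df['Proxy IP'] = (df['Proxy IP']
                  .str.replace("document.write('", "", regex=False)
                  .str.replace("');", "", regex=False))

# Convert the speed column (4th column, e.g. "123 ms") to integers
df['Proxy Speed'] = df.iloc[:, 3].str.replace(" ms", "", regex=False).astype(int)
```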

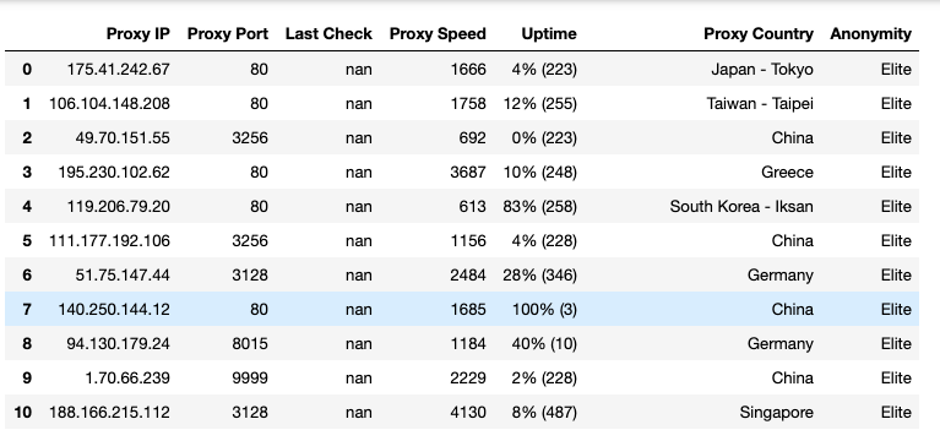

This code produces the final dataframe with the desired free proxies, previewed in the image above.
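The two replace() calls only work while the cell text is exactly document.write('<ip>');. A somewhat more tolerant alternative, swapped in here rather than taken from the steps above, is to extract the first IPv4-looking token from each cell with a regular expression via pandas' `Series.str.extract`. The helper name `clean_ip_column` and the sample cell values are illustrative assumptions.

```python
import pandas as pd

# One capture group matching a dotted IPv4 address such as 192.0.2.10
# (digits only: the octets are not range-checked)
IPV4_REGEX = r"((?:\d{1,3}\.){3}\d{1,3})"


def clean_ip_column(cells: pd.Series) -> pd.Series:
    """Return the first IPv4-looking substring of each cell, or NaN if there is none."""
    return cells.str.extract(IPV4_REGEX, expand=False)


if __name__ == "__main__":
    # Hypothetical cells shaped like the raw 'Proxy IP' column
    raw = pd.Series(["document.write('192.0.2.10');",
                     "document.write('198.51.100.7');",
                     "no address here"])
    print(clean_ip_column(raw).tolist())
```

On the scraped data this would read df['Proxy IP'] = clean_ip_column(df['Proxy IP']); rows that come back as NaN can then be dropped with dropna().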

2. Let’s work on the free proxies provided by Hidemy

Hidemy offers a free proxy list that is checked thoroughly and regularly for ping, type, country, connection speed, anonymity, and uptime (based on the number of checks).

We will use requests combined with BeautifulSoup's find_all() method to get the list of free proxies:

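```python
# The Hidemy free proxy list (url still held the Proxynova address)
url = 'https://hidemy.name/en/proxy-list/'

# Browser-like User-Agent: some sites may reject the default one sent by requests
header = {'user-agent':
          'Mozilla/5.0 (Macintosh; Intel Mac OS X 10.9; rv:32.0) Gecko/20100101 Firefox/32.0'}

r = requests.get(url, headers=header, timeout=3)
r.raise_for_status()  # stop early on 4xx/5xx responses

soup = BeautifulSoup(r.text, features="html.parser")

# Text of every <td> cell on the page, in document order
lst = [row.text for row in soup.find_all("td")]
```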

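Now that the text of every table cell is stored in lst, we can build a dict of columns and then the final dataframe. The table has seven columns, so the slice lst[i::7] collects every seventh cell starting at position i, which gives column i: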

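```python
dict_ips = {
    'Ip': lst[::7],
    'Port': lst[1::7],
    'Country': lst[2::7],
    'Speed': [w.replace(' ms', '') for w in lst[3::7]],
    'Type': lst[4::7],
    'Anonymity': lst[5::7],
    'Latest update': lst[6::7],
}

df = pd.DataFrame(dict_ips)

# Discard the first scraped row
df.drop(index=df.index[0], inplace=True)

# Speeds are now plain digits such as "120"
df['Speed'] = df['Speed'].astype(int)

# Combine address and port into the "ip:port" form used by proxy clients
df['Proxy'] = df['Ip'] + ':' + df['Port']
```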

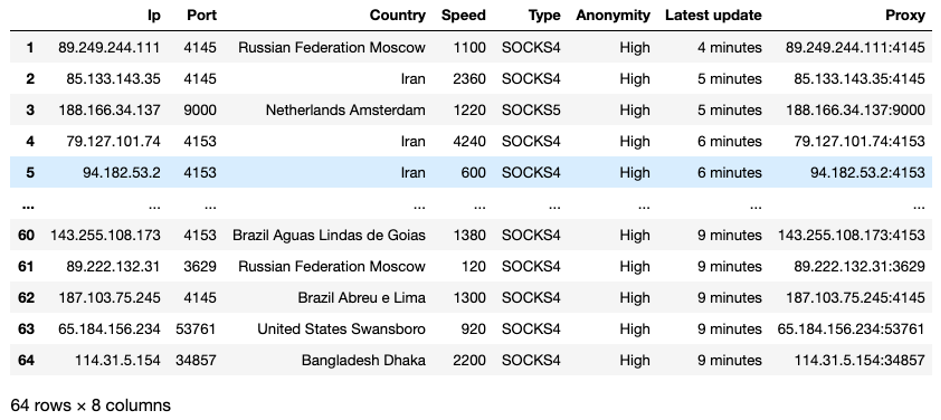

The final dataframe with the list of free proxies is shown above.
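Slicing one flat list of td cells with a stride of 7 depends on every row having exactly seven cells; if a single row has a missing or extra cell, every later value lands in the wrong column. A more defensive variant, sketched below under the assumption that each proxy is one tr element with seven td cells, walks the table row by row and skips rows of any other shape. The function name `parse_proxy_rows` is hypothetical, and the column names simply reuse the ones above.

```python
from bs4 import BeautifulSoup
import pandas as pd

COLUMNS = ["Ip", "Port", "Country", "Speed", "Type", "Anonymity", "Latest update"]


def parse_proxy_rows(html: str) -> pd.DataFrame:
    """Build one record per <tr> that has exactly len(COLUMNS) <td> cells."""
    soup = BeautifulSoup(html, features="html.parser")
    records = []
    for tr in soup.find_all("tr"):
        cells = [td.get_text(strip=True) for td in tr.find_all("td")]
        if len(cells) != len(COLUMNS):
            # Rows with another cell count (e.g. a <th> header row) are skipped
            continue
        records.append(dict(zip(COLUMNS, cells)))
    df = pd.DataFrame(records, columns=COLUMNS)
    # "120 ms" -> 120
    df["Speed"] = df["Speed"].str.replace(" ms", "", regex=False).astype(int)
    df["Proxy"] = df["Ip"] + ":" + df["Port"]
    return df
```

With the live page, parse_proxy_rows(r.text) would replace both the find_all("td") step and the dict built from it. Because rows are matched as whole units, there is also no need to drop a first row by position.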

Free proxies have their uses, and there are thousands of lists of free proxy IP addresses and their statuses. List quality varies from one provider to another, and you may end up needing a paid proxy service. Because the lists are updated continuously, test the proxies before using them and rotate through them at a regular interval.
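One way to follow that advice is to keep only the proxies that answer a test request and then cycle through them round-robin. The sketch below assumes proxies in the ip:port form of the Proxy column and plain HTTP proxies; the function names `is_working` and `rotate`, the test URL, and the 5-second timeout are illustrative choices, not part of the steps above.

```python
import itertools
from typing import Iterable, Iterator

import requests


def is_working(proxy: str, test_url: str, timeout: float = 5.0) -> bool:
    """Return True if a GET to test_url through the 'ip:port' proxy gets a status below 400."""
    proxies = {"http": f"http://{proxy}", "https": f"http://{proxy}"}
    try:
        response = requests.get(test_url, proxies=proxies, timeout=timeout)
    except requests.RequestException:
        # Refused connections, timeouts and proxy errors all count as failures
        return False
    return response.ok


def rotate(proxies: Iterable[str]) -> Iterator[str]:
    """Return an iterator that cycles through the proxies round-robin, forever."""
    pool = list(proxies)
    if not pool:
        raise ValueError("no proxies to rotate")
    return itertools.cycle(pool)


if __name__ == "__main__":
    # Hypothetical usage with the final dataframe's 'Proxy' column:
    #   working = [p for p in df["Proxy"] if is_working(p, "https://example.com")]
    #   pool = rotate(working)
    pool = rotate(["192.0.2.10:8080", "198.51.100.7:3128"])  # placeholder addresses
    for _ in range(3):
        print(next(pool))
```

Since free lists change every few minutes, re-running the is_working filter periodically keeps the pool from filling up with dead entries.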

We hope this interactive article from yacodata at www.yacodata.com helped you understand the basics of extracting free proxy lists with pandas and BeautifulSoup.

If you liked this article and this blog, tell us about it in our comment section. We would love to hear your feedback!